‘I think Black Mirror got it right,’ says director Justin Krook. ‘Technology is a reflection of ourselves.’[1] The filmmaker is speaking at a Q&A after the world premiere of his documentary Machine at the 2019 Melbourne International Film Festival, answering – alongside co-producer Michael Hilliard, co–executive producer Brian McGinn and artificial intelligence (AI) professor Toby Walsh – all the big questions duly raised by a movie peering into the near-future evolution of machine learning. Krook’s evocation of the acclaimed Netflix / Channel 4 anthology series is notable, given that his film recounts cases of life imitating art.

In Owen Harris’ classic 2013 Black Mirror episode ‘Be Right Back’, a young woman loses her husband in a car accident. To cope with her grief, she signs up for a service in which an algorithm mines all the emails and social-media posts of a late loved one and creates an AI persona that can communicate, via text, in their ‘voice’; the episode is a commentary not just on the grieving process, but on the broad acceptance, in the digital age, of ersatz approximations of human connection. Series creator Charlie Brooker, who wrote the episode, was inspired, while up late at night scrolling through Twitter, by the paranoid thought: ‘[W]hat if these people were dead and it was software emulating their thoughts?’[2]

In 2015, Eugenia Kuyda – whom we meet in Machine – lost her best friend, Roman Mazurenko, after he was struck by a car in San Francisco. In Silicon Valley, she had founded an AI start-up, Luka, that was programming bots for commercial purposes. After Mazurenko’s death, she found herself scrolling through their endless text-message threads and, inspired by Harris’ Black Mirror episode, wondered what would happen if she fed all these messages into a neural network.[3] Within a year, the Luka app offered a Roman bot that users could interact with, mimicking Mazurenko’s tech-mediated use of language. In 2017, Kuyda released Replika, an app through which users could customise personal bots and exchange messages with them.[4]

Within mere years, a satirical idea went from near-future parable to mundane online reality. This is how things go in the realm of machine learning, wherein the technological increases are rapid, the ramifications are only understood after the fact and old social norms are routinely obliterated. Machine examines the issues – and the people – on this technological cutting edge, cramming all manner of talking heads and big ideas into an accessible eighty-six-minute product. The people behind the production have previously worked with Netflix: Krook on a doc on EDM DJ Steve Aoki, I’ll Sleep When I’m Dead (2016), and McGinn and fellow executive producer David Gelb on the series Chef’s Table and Street Food. In turn, this movie they’ve collaborated on feels like prime fodder for streaming-service menus: an of-the-moment issue addressed in a bright, engaging but elementary feature.

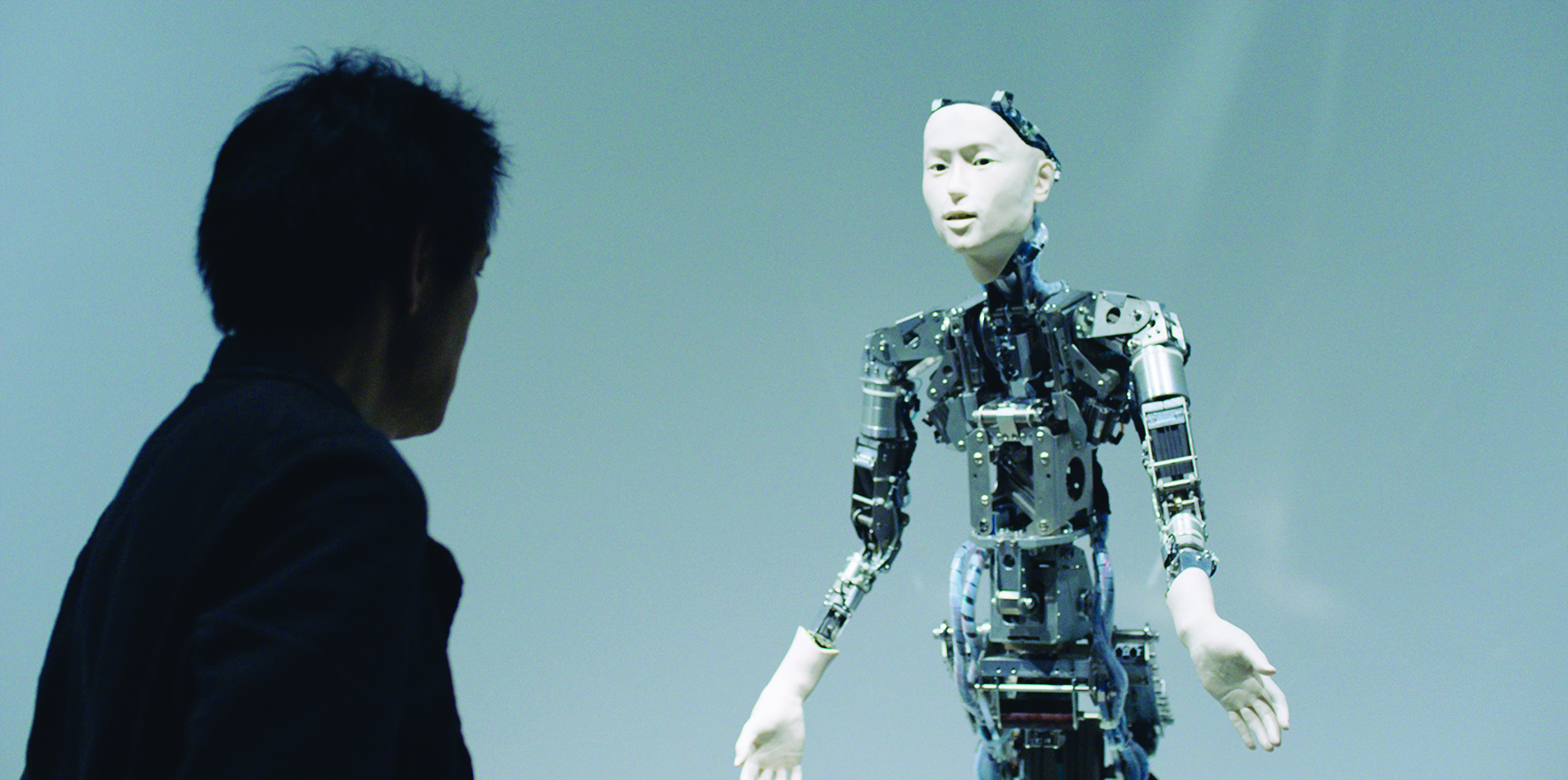

Machine is divided into seven chapters that serve as thematic bullet points. ‘Replicants’ introduces us to Kuyda and Replika, as well as to roboticists working on making androids and, of course, sexbots. ‘The AI Apocalypse’ discusses how, despite our panicky human fears, most AI creations are monofunctional and very limited. ‘The Driverless Dilemma’ tackles the push towards driverless cars, along with the positives and perils of their imminent arrival. ‘The Social Algorithm’ dissects social media’s ‘attention economy’ of clicks and targeted advertisements, touching on the death of journalism and the rise of fake news and deepfakes: artificially generated videos that can look real. ‘Autonomous Warfare’ is about the use of autonomous weapons – ethical horror shows whose zealots hope to remove ‘human error’ from war. ‘Human Machine’ looks at the technology melding bodies and bots, particularly through direct brain–computer interfaces. And ‘Super Intelligence’ is about the idea of ‘the singularity’ as well as our fears around the ‘runaway AI’ effect and losing the primacy of our species.

The tenor of this fear is set in a prologue that assembles contemporaneous news reports of a 2016 match between eighteen-time world Go champion Lee Se-dol and an AI, AlphaGo, developed by Google’s DeepMind program. With shades of the historic 1997 chess match between master Garry Kasparov and computer program Deep Blue, it’s a symbolic showdown. Lee rises to the occasion, saying in advance, ‘I believe that human intuition is still too advanced,’ and, ‘I’m going to do my best to protect human intelligence.’ After he, of course, loses in front of the press, he apologises for being ‘too weak’, and for letting humanity down.

Most movies tend to place robots in – or, more so, push them into – a grand, symbolic us-against-them position, tilting towards dystopian terror or revolutionary zeal. Machine takes the temperature on such an approach and finds it tepid. AI and robotics pioneer Rodney Brooks offers that, for all the advancements made in the field of machine learning, there’s ‘no progress in more general artificial intelligence at the moment’. Meaning: we can build machines to perform individual tasks, but not machines that can perform many tasks or teach themselves along the way. AlphaGo may’ve triumphed over Lee in a game it was built to play, but, if the rules were changed or the playing board were expanded, it couldn’t adapt on the fly, let alone manage to switch to playing chess or mahjong.

In fact, the danger isn’t from hyper-intelligent machines rising up to enslave us, Walsh clarifies, but from us overestimating the abilities of ‘stupid’ AI, giving it responsibilities way beyond its capabilities. ‘I’m very worried we’re going to get it wrong with all the other things that are going to change our lives with artificial intelligence,’ he says in the film.

One realm in which people are pushing recklessly ahead is with driverless cars, which are already being sent out into the wild. When we meet Miklós Kiss, the head of advance development for automated driving at Audi, we feel his own personal yearning for the automated commute – this yet more ‘wasted time’ that could be spent doing more work! Out on the German autobahn, automated driving systems can live up to this dream; with their 360-degree sensors, they even grasp things beyond humans’ field of vision. But Kiss suggests that, in a metropolis, with bikes, trams, people and pets, the inability to intuit the actions of others or react to chaotic or unexpected circumstances makes machine-driving a non-starter.

Which is to say nothing of the potential ethics of automated automobiles. Whereas those who proselytise driverless cars speak of the potential to limit casualties, the first pedestrian death caused by an autonomous driving system – in Tempe, Arizona, in 2018, involving a self-driving Uber[5] – has led to attendant hue and cry. Iyad Rahwan, co-creator of Massachusetts Institute of Technology’s Moral Machine lab, invokes a philosophical riddle called the ‘trolley problem’ as it relates to giving machines the choice to prioritise the safety of pedestrians over the safety of the passenger – to, essentially, think of the greater good over the welfare of the individual. Rahwan ‘led a team that crowdsourced 40 million decisions from people worldwide about the ethics of autonomous vehicles’,[6] and most people surveyed claim that minimising harm to all should take precedence, while also admitting that they wouldn’t want to buy a car programmed in such a fashion. ‘There’s this mismatch between what people want for society,’ Rahwan offers, ‘and what they’re willing to contribute themselves.’

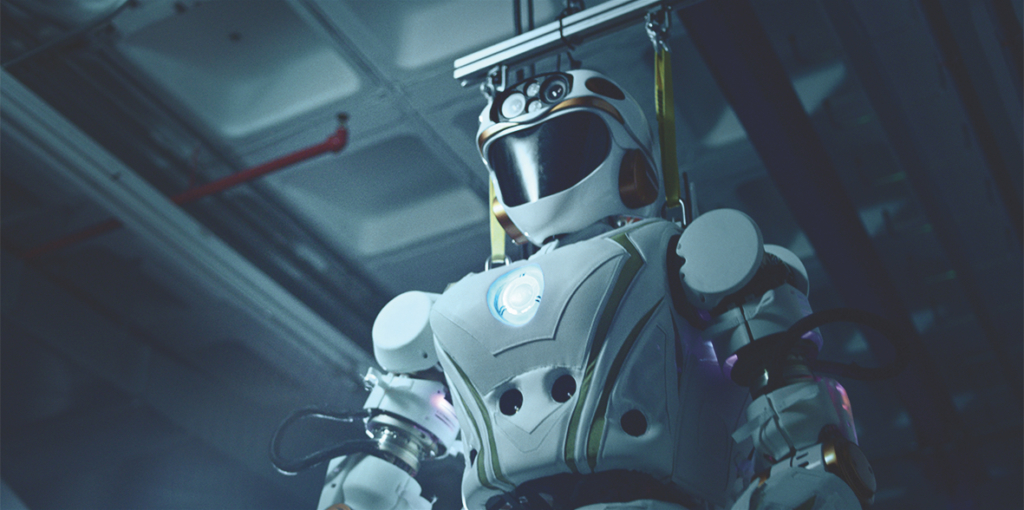

An even stickier ethical quandary – or, perhaps, quagmire – comes with the development of autonomous weapons. Forget drone warfare, which is already entrenched in modern-day combat; automated gun turrets have been present in the Korean Demilitarised Zone since 2010,[7] and an AI-piloted navy fighter had already taken off from and landed on an aircraft carrier as of 2013.[8] In such a landscape, the introduction of killer robots seems wholly plausible. If there is a ‘villain’ in Machine, it’s autonomous-weapons acolyte Missy Cummings, a former US Navy fighter pilot turned director of the Humans and Autonomy Lab at Duke University. It’s her belief that ‘using autonomous weapons could potentially make war as safe as one could possibly make it’. Yet, in arguing for something to remove ‘human error’ from conflict, she essentially removes the humanity from war – not just from those who fight in it, but from those victimised by it.

While Krook largely avoids fearmongering, the most terrifying moment in Machine comes from a bit of provocative juxtaposition: the bellicose proclamations of US President Donald Trump and Russian President Vladimir Putin (‘Whoever becomes the leader in [AI] will become the ruler of the world,’ pronounces the latter) leading to a montage of real dead and wounded bodies, the casualties of drone strikes and bomb blasts. The United Nations (UN) Convention on Certain Conventional Weapons is, fortunately, discussing these ethical issues,[9] and, in 2019, UN Secretary-General António Guterres lobbied for autonomous machines ‘with the power and discretion to select targets and take lives’ to be ‘prohibited by international law’[10] – echoing a popular push embodied by, for example, the Elon Musk–backed Campaign to Stop Killer Robots.[11]

‘I don’t think we appreciate how much nuance goes into our value system,’ says tech writer Tim Urban. He’s not speaking about the perils of autonomous weapons or self-driving cars, but about the entire AI paradigm. It’s one of several lines in the film that feel pleasingly sweeping. ‘When someone interacts with an AI, it does reveal things about yourself. It’s a sort of mirror,’ says sex roboticist Matt McMullen. ‘What will happen if androids become so advanced they are indistinguishable from humans?’ poses Hiroshi Ishiguro, roboticist at Advanced Telecommunications Research Institute International. ‘If someone can have access to your thoughts,’ wonders neurosurgeon Eric Leuthardt, about brain–technology interfaces, ‘how can that be used to manipulate you? What happens when a corporation gets involved, and [it] now [has] large aggregates of human thoughts and data?’ These are the kinds of big thoughts that inspire whole movies – and much speculative fiction – but, in Krook’s film, the questions are asked, left to momentarily linger, then quickly moved on from. Machine’s big-picture overview, its need to briskly navigate through a host of subjects, makes it a great primer and an accessible documentary. But there are many times when it pushes on to the next topic just as the conversation is getting interesting.

That’s especially notable in ‘The Social Algorithm’, which feels like the most trenchant topic within the documentary’s many issues. Addressing such a subject, Machine presents us not with talking heads prognosticating future possibilities, but with people speaking about the contemporary climate we’re in. Rather than engaging with the theoretical, this is a conversation about the practical: interacting with bots and machine learning via social-media feeds is already a daily occurrence for modern metropolitan citizens. While the topic doesn’t have the sexy allure of killer robots, these systems are, by now, ushering in a very real dystopia. Facebook, as journalist Mark Little offers in the film, is now ‘the most dominant news-distribution platform in the history of humanity’, yet it does little to filter out fake news or crack down on conspiracy-theorising and the espousal of dangerous propaganda (like climate denial) under the guise of information. It marks, he says, ‘the systematic pollution of the world’s information supplies’. And the recent arrival of deepfakes means that, soon, social-media scrollers will fall victim to ‘entirely synthetic approximations of reality’ used to further agendas and delude viewers.

Facebook algorithms are amoral at best, immoral at worst – coded only to generate clicks, trapping users so as to exploit their focus, which is the currency of the attention economy behind the ‘feed’. All of this is in service of the corporate behemoths essentially bankrolling the operation: targeted advertising as the capitalist output of an all-seeing surveillance organ. Private data is, in Facebook’s hands, less private for individuals, more data to be sold to corporate entities to use as they please, even if that intentionally undermines democracy. ‘We used to buy products; now we are the products,’ spits Little, likening our attention to oil: a resource to be mined. The easiest way to get people’s attention is to cynically prey on the base emotions of anger and sadness, the machinery of clicks lubricated by equal doses of outrage and pity.[12]

In Machine, this moment-defining subject is just another big idea tossed into the mix in a fashion that feels a little perfunctory. Admittedly, the existence of contemporaneous documentaries that burrow deeper into this thematic terrain – like The Great Hack (Karim Amer & Jehane Noujaim, 2019), which chronicles the Cambridge Analytica scandal,[13] and the citizen-journalist shrine Bellingcat: Truth in a Post-truth World (Hans Pool, 2018) – is what brings this feeling into relief. Those documentaries address more discrete stories, whereas Machine’s brief is to survey a plurality of them. It seeks to function as a kind of entry-level text, a documentary primer to prompt further research: more a light conversation-starter than a sustained, self-contained lecture. Krook’s evocation of Black Mirror is one of idealism, drawing a comparison to one of TV’s most acclaimed series. But it shows an awareness of what Machine is, too: not an exhaustive exploration of a subject, but an easily accessible entertainment.

Endnotes

| No. | Note |
|---|---|
| 1 | Justin Krook, Machine post-screening Q&A, Melbourne International Film Festival, 16 August 2019. |
| 2 | Charlie Brooker, quoted in Gabriel Tate, ‘Charlie Brooker and Hayley Atwell Discuss Black Mirror’, TimeOut, 31 January 2013, <https://www.timeout.com/london/tv-and-radio-guide/charlie-brooker-and-hayley-atwell-discuss-black-mirror>, accessed 11 October 2019. |
| 3 | Casey Newton, ‘Speak, Memory’, The Verge, 6 October 2016, <https://www.theverge.com/a/luka-artificial-intelligence-memorial-roman-mazurenko-bot>, accessed 11 October 2019. |
| 4 | See the Replika website, <https://replika.ai>, accessed 11 October 2019. |
| 5 | Daisuke Wakabayashi, ‘Self-driving Uber Car Kills Pedestrian in Arizona, Where Robots Roam’, The New York Times, 19 March 2018, <https://www.nytimes.com/2018/03/19/technology/uber-driverless-fatality.html>, accessed 11 October 2019. |
| 6 | ‘Iyad Rahwan’, in ‘The Experts’, Machine official website, <https://machine.movie/#the-experts>, accessed 11 October 2019. |
| 7 | Aaron Saenz, ‘Armed Robots Deployed by South Korea in Demilitarized Zone’, Singularity Hub, 25 July 2010, <https://singularityhub.com/2010/07/25/armed-robots-deployed-by-south-korea-in-demilitarized-zone-on-trial-basis/>, accessed 11 October 2019. |
| 8 | Brandon Vinson, ‘X-47B Makes First Arrested Landing at Sea’, US Navy website, 10 July 2013, <https://www.navy.mil/submit/display.asp?story_id=75298>, accessed 11 October 2019. |
| 9 | Sono Motoyama, ‘Inside the United Nations’ Effort to Regulate Autonomous Killer Robots’, The Verge, 27 August 2018, <https://www.theverge.com/2018/8/27/17786080/united-nations-un-autonomous-killer-robots-regulation-conference>, accessed 11 October 2019. |
| 10 | António Guterres, quoted in ‘Autonomous Weapons That Kill Must Be Banned, Insists UN Chief’, UN News, 25 March 2019, <https://news.un.org/en/story/2019/03/1035381>, accessed 11 October 2019. |
| 11 | See the Campaign to Stop Killer Robots website, <https://www.stopkillerrobots.org>, accessed 11 October 2019. |
| 12 | For more on Facebook and the ‘attention economy’, see Tobias Rose-Stockwell, ‘This Is How Your Fear and Outrage Are Being Sold for Profit’, Quartz, 29 July 2017, <https://qz.com/1039910/how-facebooks-news-feed-algorithm-sells-our-fear-and-outrage-for-profit/>, accessed 11 October 2019. |
| 13 | For more on the Cambridge Analytica scandal, see the BBC’s coverage at <https://www.bbc.com/news/topics/c81zyn0888lt/facebook-cambridge-analytica-scandal>, accessed 11 October 2019. |